If you haven’t read my recent post about Bronitoring, you probably don’t know what this tool is. It’s super simple!

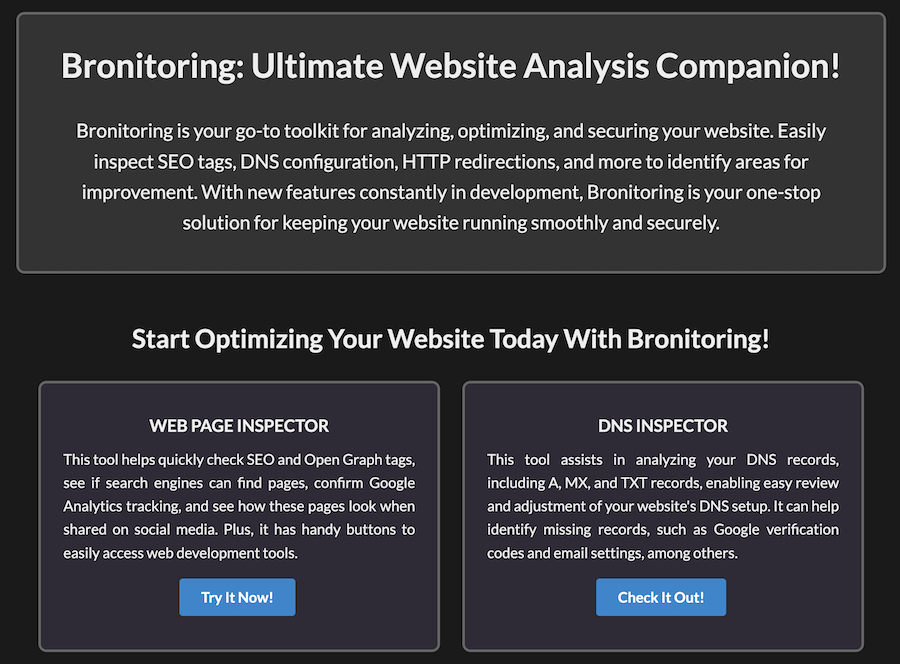

Bronitoring is your go-to toolkit for analyzing, optimizing, and securing your website. Easily inspect SEO tags, DNS configuration, HTTP redirections, and more to identify areas for improvement. With new features constantly in development, Bronitoring is your one-stop solution for keeping your website running smoothly and securely.

24 days have passed since my public Bronitoring launch on ProductHunt. We got 25 upvotes and a bunch of supporters. I want to say thank you to everyone who helped me with this launch, tested the product, and provided useful feedback. Thank you, guys!

What’s New?

Bronitoring is now not only a single-page analyzer but a set of tools to help you work on your own (or your customers’) websites.

Here is a short list of changes:

- Updated Sitemap Inspector

- Simplified Page Inspector

- Added robots.txt Inspector

- Added DNS Inspector

- Added HTTP Redirections Inspector

- Added SSL Certificate Inspector

- Updated the landing page

Sitemap Inspector

When I started working on Bronitoring a few months ago, sitemaps were analyzed inside the Page Inspector, which was bad for performance. So I extracted sitemap analysis into a separate tool, but the first version was awful and useless.

Here is how I see this tool:

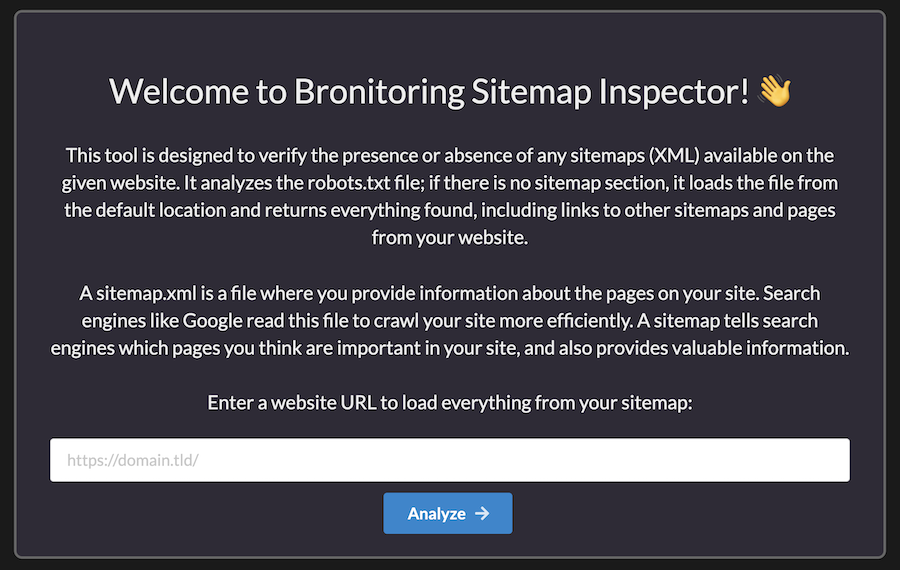

This tool is designed to check whether a given website has any XML sitemaps. A sitemap tells search engines which pages you think are important on your site and provides metadata about them, such as when each page was last modified.

What Sitemap Inspector Should Do

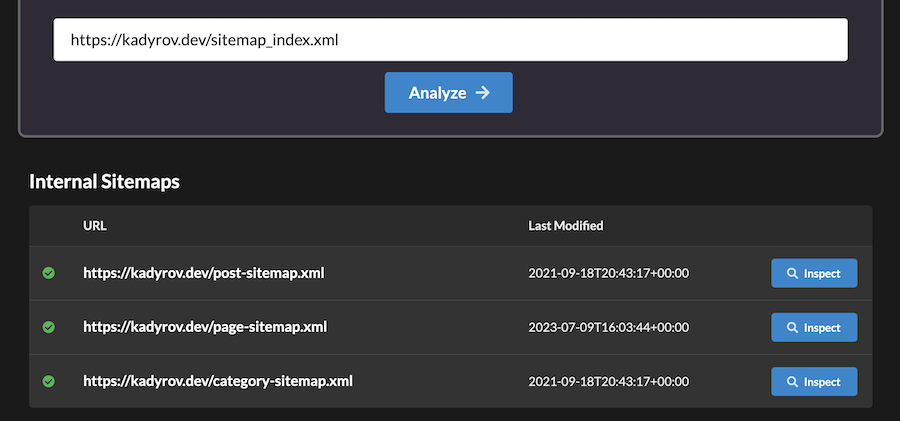

I wanted to have a tool that not only lists pages from the sitemap on your website but also analyzes the entire content inside the index sitemap, as well as internal sitemaps with metadata.

After analyzing more than 100 websites since the ProductHunt launch, I found a bunch of websites that don’t have sitemaps at all, or whose sitemaps are in an invalid format. Of course, this affects your search appearance, because search engines can’t use missing or broken files.

The first version just grabbed the first sitemap, analyzed its content, and iterated until it reached the first 100 pages. Then it stopped and returned this list without any useful information. It was more like getting a list of available pages from a website. Not enough for me.

What kind of errors does it check now?

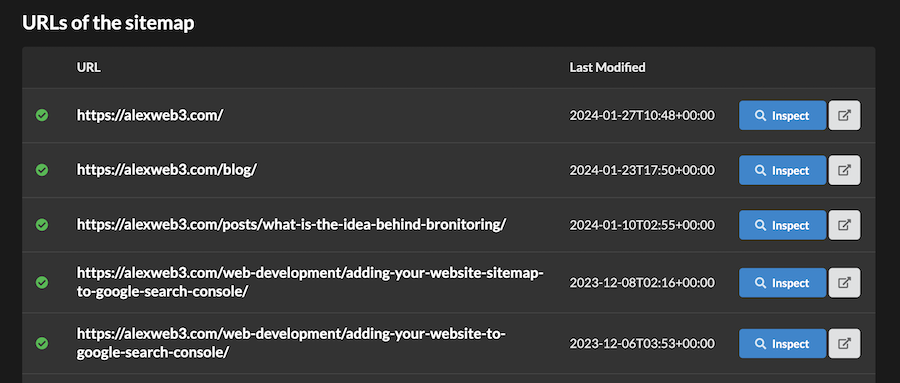

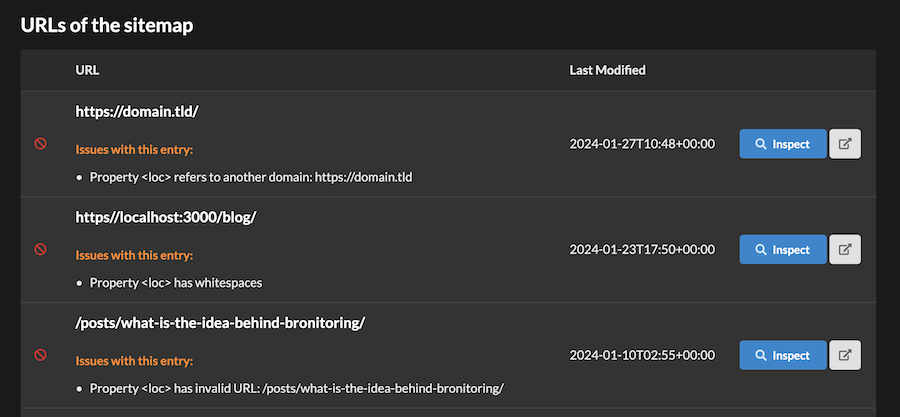

Now Sitemap Inspector can analyze almost everything:

- Valid XML markup

- Empty URL entries

- Valid last modification date

- Whitespace in URL locations
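
The checks in this list can be sketched in a few lines of Python. This is not Bronitoring’s actual code, just a minimal illustration using the standard library. The names `check_sitemap` and `is_valid_lastmod` are made up for this example, and the date check is a simplification: it accepts full dates and timestamps but rejects the shorter `YYYY` and `YYYY-MM` forms that the sitemap protocol also allows.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

# Namespace used by the sitemaps.org protocol.
NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def is_valid_lastmod(value):
    """Accept full dates or timestamps such as 2024-01-15 or 2024-01-15T10:00:00Z."""
    try:
        # Older Python versions do not accept a trailing "Z", so normalize it.
        datetime.fromisoformat(value.replace("Z", "+00:00"))
        return True
    except ValueError:
        return False


def check_sitemap(xml_text):
    """Return a list of (location, problem) tuples for a urlset or sitemap index."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [("", f"invalid XML: {exc}")]

    problems = []
    # <url> entries live in a urlset, <sitemap> entries in a sitemap index.
    entries = list(root.iter(NS + "url")) + list(root.iter(NS + "sitemap"))
    for entry in entries:
        loc = entry.findtext(NS + "loc") or ""
        if not loc.strip():
            problems.append((loc, "empty location"))
        elif loc != loc.strip() or " " in loc:
            problems.append((loc, "whitespace in location"))
        lastmod = entry.findtext(NS + "lastmod")
        if lastmod is not None and not is_valid_lastmod(lastmod):
            problems.append((loc, "invalid lastmod"))
    return problems
```

Calling `check_sitemap` on the text of a sitemap returns an empty list when none of these four problems is found.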

How Does It Work?

It has two modes: analyzing robots.txt first, or analyzing a sitemap by a given URL.

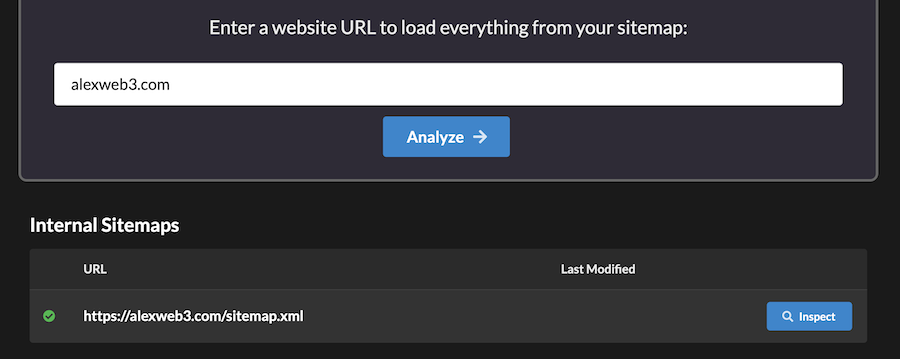

If you enter only your domain name, it starts by loading your robots.txt and parsing sitemap locations from this file. If there are any, it returns a list of available sitemaps but doesn’t analyze them yet.

If your website doesn’t have a robots.txt, this tool tries to load the sitemap from its default location (/sitemap.xml or /sitemap_index.xml).
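
Here is a rough sketch of that discovery step in Python. The function names are hypothetical, and one detail is my assumption for the example: it also falls back to the default locations when robots.txt exists but lists no sitemaps.

```python
from urllib.parse import urljoin

# Default locations mentioned above.
DEFAULT_SITEMAP_PATHS = ("/sitemap.xml", "/sitemap_index.xml")


def sitemaps_from_robots(robots_txt):
    """Extract URLs from 'Sitemap:' lines (the field name is case-insensitive)."""
    found = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "sitemap" and value.strip():
            found.append(value.strip())
    return found


def candidate_sitemaps(base_url, robots_txt):
    """robots_txt is the file body, or None when the site has no robots.txt."""
    if robots_txt is not None:
        found = sitemaps_from_robots(robots_txt)
        if found:
            return found
    # Assumption: fall back to the defaults whenever nothing was listed.
    return [urljoin(base_url, path) for path in DEFAULT_SITEMAP_PATHS]
```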

When you click the “Inspect” button, it will perform an analysis of this file and return everything from inside.

If the Sitemap Inspector detects any errors, you will see everything in this interface. For example, empty or invalid URLs, invalid modification dates, etc.

If you want to analyze a specific post or page from your website, you can click “Inspect” and analyze it using the Page Inspector. You will be redirected to another tool. I love it!

Test your sitemap: https://bronitoring.alexweb3.com/sitemap-inspector

New Robots.txt Inspector

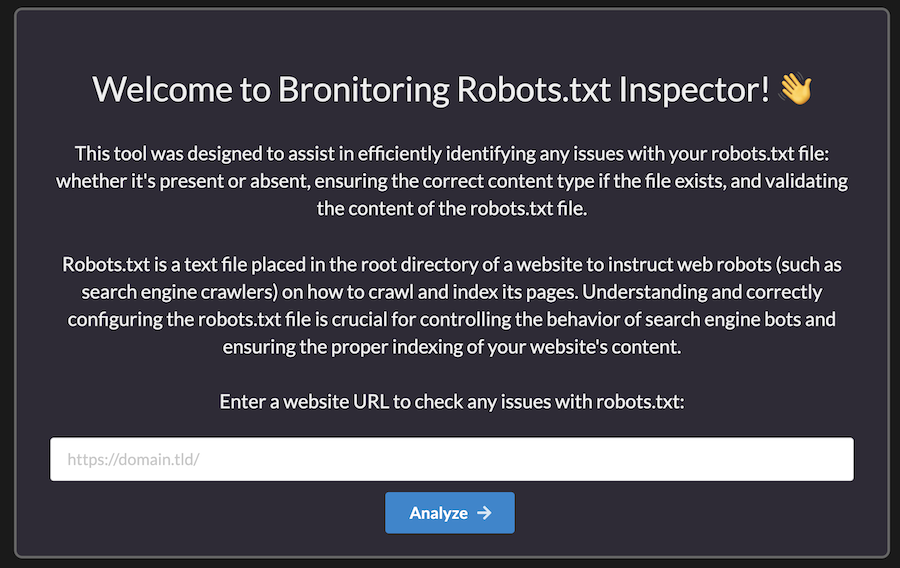

Since many websites don’t have valid sitemaps, I suspected that many don’t have a robots.txt either. So, why not build another tool to help us detect such issues?

Here is my marketing message explaining how this inspector would work:

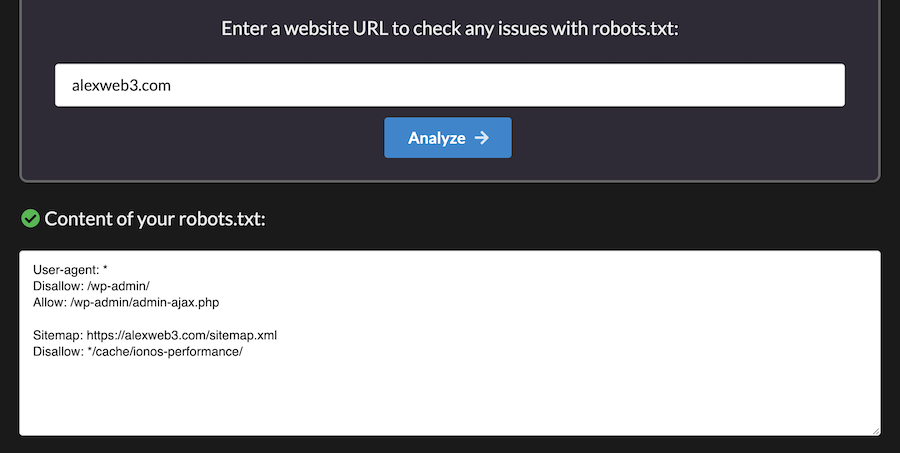

This inspector was designed to assist in efficiently identifying any issues with your robots.txt file: whether it’s present or absent, ensuring the correct content type if the file exists, and validating the content of the robots.txt file. Everything you need in one place.

At the moment of writing, the Robots.txt Inspector doesn’t show any real issues, like invalid rules or something like that. It just checks the presence or absence of the robots.txt for a given website and returns its content.
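
To illustrate the presence and content type checks, here is a small Python sketch. The function `inspect_robots` and the shape of its result are invented for this example, and it assumes the status code, `Content-Type` header, and body have already been fetched.

```python
def inspect_robots(status, content_type, body):
    """Summarize a robots.txt response: presence, content type, and raw content."""
    if status != 200:
        return {
            "present": False,
            "issues": [f"robots.txt returned HTTP {status}"],
            "content": "",
        }
    issues = []
    # Ignore parameters such as "; charset=utf-8" when comparing the media type.
    media_type = (content_type or "").split(";", 1)[0].strip().lower()
    if media_type != "text/plain":
        issues.append(f"unexpected content type: {media_type or 'missing'}")
    return {"present": True, "issues": issues, "content": body}
```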

New HTTP Redirections Inspector

The next tool helps us detect invalid configurations on the web server side. Every website must have an SSL certificate. But that’s not enough if your web server doesn’t redirect users from the HTTP version to HTTPS.

It’s also important to return a valid response when people request a page that doesn’t exist on your website.

The HTTP Redirections Inspector was created to identify incorrect or missing HTTP redirects, including HTTP to HTTPS, www to non-www versions of your website, and vice versa. Additionally, it checks the correctness of responses for 404 pages on your website.

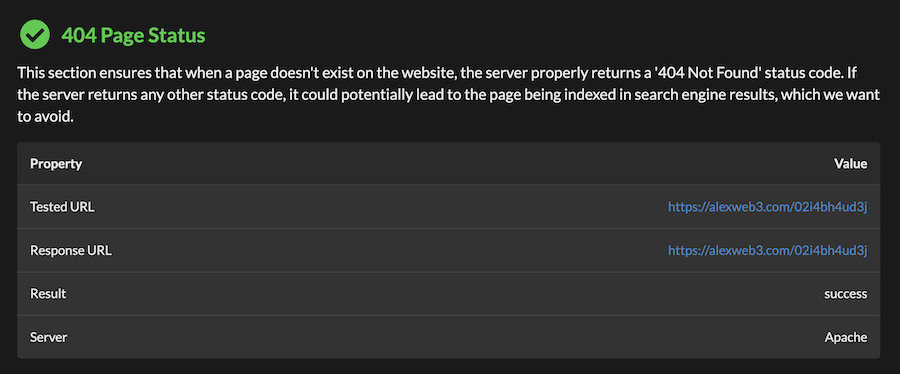

Testing 404 pages

I have seen many websites that don’t return a proper 404 status code for non-existent pages. This tool can identify such errors.
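
One way to test this is to request a random path that almost certainly doesn’t exist and look at the status code. The sketch below shows the idea in Python. It is not how Bronitoring is implemented, the `probe-` path and the function names are made up, and treating 410 as acceptable is my own choice. Network errors such as timeouts are not handled here.

```python
import urllib.error
import urllib.request
import uuid


def fetch_status(url):
    """Return the final HTTP status code for url (redirects are followed)."""
    try:
        with urllib.request.urlopen(url, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urllib raises for 4xx and 5xx responses; the code is still what we want.
        return error.code


def check_not_found(base_url, fetch=fetch_status):
    """Request a random path that should not exist and judge the status code."""
    probe = f"{base_url.rstrip('/')}/probe-{uuid.uuid4().hex}"
    status = fetch(probe)
    if status in (404, 410):
        return True, f"{status} returned for a missing page"
    if 200 <= status < 300:
        return False, f"soft 404: missing page answered with {status}"
    return False, f"unexpected status {status} for a missing page"
```

The `fetch` parameter exists only so the check can be tried without a network connection, for example `check_not_found("https://example.com", fetch=lambda url: 200)`.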

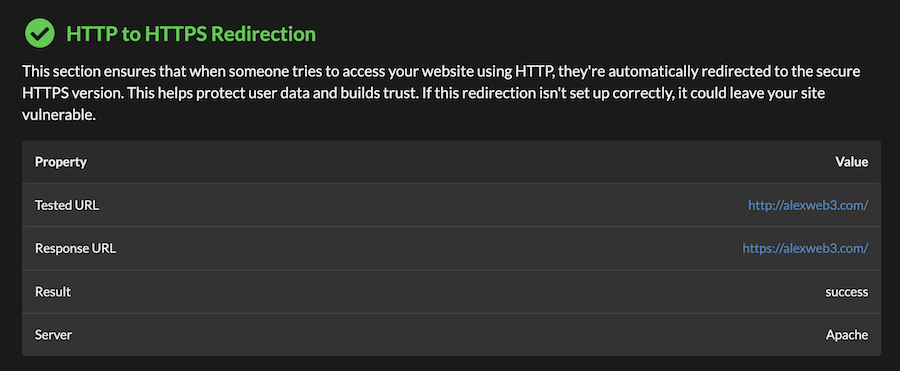

Testing HTTP to HTTPS redirects

Another useful test in this tool checks that the HTTP version of your website redirects to HTTPS. This is crucial for SEO because it avoids serving duplicate copies of your website.

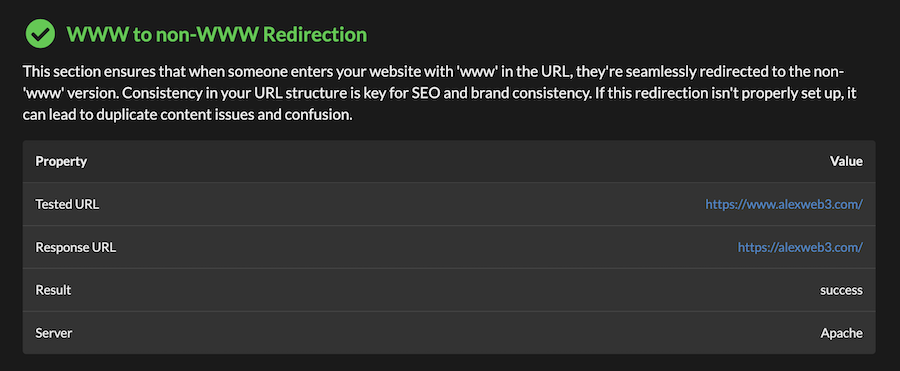

Testing WWW to non-WWW redirects

The last part of this inspector analyzes redirects from www to non-www domains and vice versa. Your website must have only one canonical domain, and your web server must handle this redirection properly.
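
Both redirect tests come down to the same question: do all four variants of your address end up in one place, and is that place HTTPS? Here is a minimal Python sketch of that idea. The function names are hypothetical, and the default resolver simply follows redirects with `urllib` without handling connection errors.

```python
import urllib.request
from urllib.parse import urlsplit


def variants(host):
    """Build the four scheme and www combinations for a host name."""
    bare = host[4:] if host.startswith("www.") else host
    return [
        f"{scheme}://{prefix}{bare}/"
        for scheme in ("http", "https")
        for prefix in ("", "www.")
    ]


def final_url(url):
    """Follow redirects and return the URL that was finally served."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.geturl()


def check_redirects(host, resolve=final_url):
    """Map each variant to the scheme and host it finally lands on."""
    landings = {}
    for url in variants(host):
        parts = urlsplit(resolve(url))
        landings[url] = f"{parts.scheme}://{parts.netloc}"
    targets = set(landings.values())
    # Healthy setup: every variant lands on the same HTTPS origin.
    ok = len(targets) == 1 and next(iter(targets)).startswith("https://")
    return ok, landings
```

When the first value in the result is `False`, the `landings` dictionary shows which variant ends up on the wrong scheme or host.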

New DNS Inspector

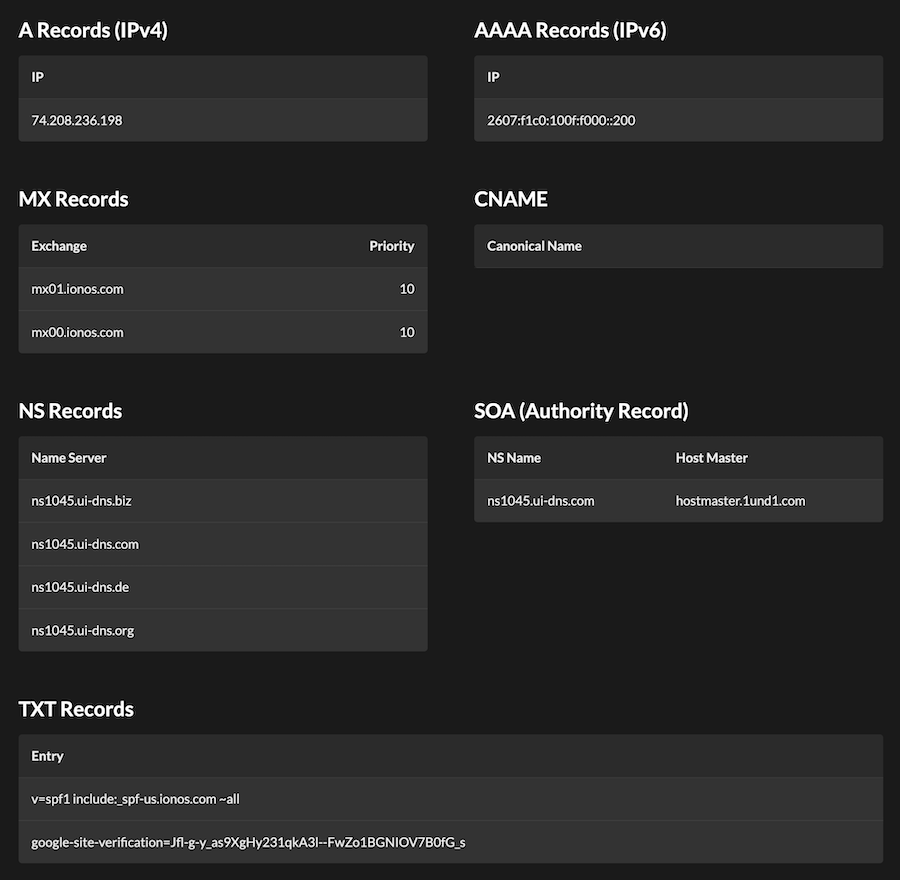

I wanted to have a tool that can help me find any missing records in DNS. At the moment of writing, the DNS Inspector just displays everything found about your domain name.

This tool assists in analyzing your DNS records, including A, MX, and TXT records, enabling easy review and adjustment of your website’s DNS setup. It can help identify missing records, such as Google verification codes and email settings, among others.

Why do I need this tool?

So, from time to time, I need to make changes to my domain settings, and I forget which DNS server manages my domain. Now I can find it easily and go directly to my panel for making any changes.

In the next release, I want to have a more visually appealing interface.

New SSL Certificate Checker

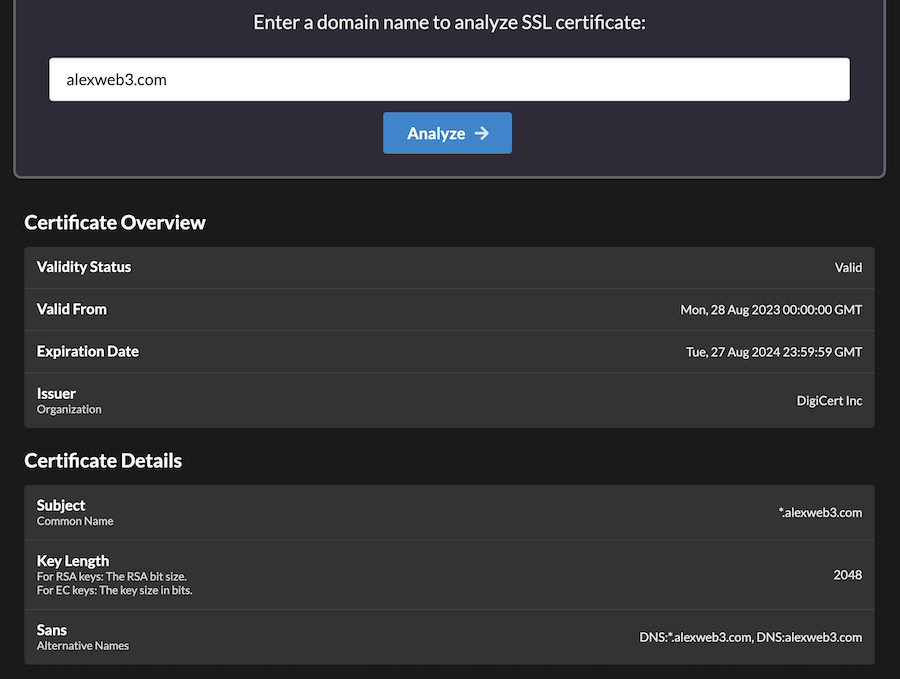

This tool gives easy-to-understand information about the SSL certificate issued for a given domain. It checks details like validity period, issuer, and encryption strength. Whether a certificate is present or not, you’ll get clear insights to keep your website secure.
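
For the curious, the validity period and issuer can be read with Python’s standard `ssl` module. This is only a sketch under a few assumptions: the function names are made up, it covers validity and issuer but not encryption strength, and because it uses the default verification settings, an expired or untrusted certificate raises an error instead of returning a summary.

```python
import socket
import ssl
from datetime import datetime, timezone


def fetch_certificate(host, port=443):
    """Open a TLS connection and return the server certificate as a dict."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def summarize_certificate(cert, now=None):
    """Extract the issuer, expiry date, and remaining days from a certificate dict."""
    now = now or datetime.now(timezone.utc)
    expires = datetime.fromtimestamp(
        ssl.cert_time_to_seconds(cert["notAfter"]), tz=timezone.utc
    )
    # The issuer is a tuple of RDNs, each a tuple of (name, value) pairs.
    issuer = dict(item for rdn in cert["issuer"] for item in rdn)
    return {
        "issuer": issuer.get("organizationName", issuer.get("commonName", "")),
        "expires": expires,
        "days_left": (expires - now).days,
    }
```

A typical call would be `summarize_certificate(fetch_certificate("example.com"))`.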

Frankly speaking, it seems like a useless tool at this moment because there are many other tools that provide us with the same information, but I don’t want to google it every time I need to check my customers’ SSL certificates.

Conclusion

Bronitoring is in an active development stage, and I do my best to make it better every week. There are plenty of other tools I want to build:

- HTTP Headers Inspector

- External Links Collector

- Email Security Assessment

- Broken Links Checker

- Contacts Detector

- Domain Blocklist Checker

I hope I will be able to manage everything on my own 😀
